I was chasing down a Virtual Distributed Switch NetFlow support question the other day because a customer was wondering how our NetFlow Analyzer would handle the export. I finally took the time to figure out what a Virtual Distributed Switch (VDS) IP address is and how it relates to the NetFlow exporter. A VDS is distributed in the sense that physical network adapters from multiple ESX hosts (esx1, esx2, esx3, etc.) can all be part of the same switch.

Virtual Distributed Switch NetFlow Support

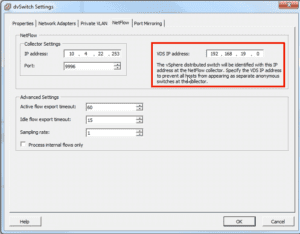

If you enable NetFlow on a VDS as shown above and assign an IP to the VDS, all flows originate from that IP, regardless of which ESX host the VMs are on. You will have one exporter source IP. If you don’t assign an IP to the VDS, the flows for each VM are exported from the management IP of the ESX host that VM currently resides on.

Multiple ESX Servers = 1 NetFlow Exporter

Let me explain it another way.

- If you have 4 ESX hosts each with 15 virtual machines and a “VDS IP address” is not assigned, you will see 4 exporters appear in your NetFlow Analyzer. The source IPs of those exporters will be the management IPs of the ESX hosts.

- If you have 4 ESX hosts each with 15 virtual machines and a “VDS IP address” is assigned, you will see 1 exporter appear in your NetFlow Analyzer. All flows, regardless of how many ESX hosts are part of the VDS, will be sent from the configured VDS IP address.
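The two cases above can be sketched in a few lines of Python. This is only an illustration of the rule as described here, not VMware code: the function name `exporter_ips` and the sample addresses are made up for the example.

```python
def exporter_ips(host_mgmt_ips, vds_ip=None):
    """Return the set of exporter source IPs a collector would see.

    Hypothetical helper: models the behavior described above, where a
    configured VDS IP replaces the per-host management IPs.
    """
    if vds_ip is not None:
        # VDS IP assigned: every host exports from the same address.
        return {vds_ip}
    # No VDS IP: each ESX host exports from its own management IP.
    return set(host_mgmt_ips)


# Four ESX hosts (documentation-range addresses, chosen for the example).
hosts = ["192.0.2.11", "192.0.2.12", "192.0.2.13", "192.0.2.14"]

print(len(exporter_ips(hosts)))                       # 4
print(len(exporter_ips(hosts, vds_ip="192.0.2.100")))  # 1
```

Note that the number of virtual machines per host never enters the calculation; only the hosts and the optional VDS IP matter.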

This is all well and good, but as I read on in the VMware documentation, I came across a topic that isn’t discussed very often in the world of NetFlow and IPFIX. It is a topic that carries different names depending on the version of NetFlow that is being exported.

Engine ID Vs Source ID Vs Observation Domain ID

Simply put: Engine ID is a NetFlow v5 and v8 thing, Source ID is a NetFlow v9 thing, and Observation Domain ID is an IPFIX thing. My guess is that you want to learn more, so read on.

- Engine ID: (NetFlow v5 & v8) The Versatile Interface Processor (VIP) or line card (LC) slot number of the flow switching engine.

- Source ID: (NetFlow v9) This field is a 32-bit value that is used to guarantee uniqueness for all flows exported from a particular device. (The Source ID field is the equivalent of the engine type and engine ID fields found in the NetFlow Version 5 and Version 8 headers). The format of this field is vendor specific. In Cisco’s implementation, the first two bytes are reserved for future expansion, and will always be zero. Byte 3 provides uniqueness with respect to the routing engine on the exporting device. Byte 4 provides uniqueness with respect to the particular line card or Versatile Interface Processor on the exporting device. Collector devices should use the combination of the source IP address plus the Source ID field to associate an incoming NetFlow export packet with a unique instance of NetFlow on a particular device.

- Observation Domain ID: (IPFIX) An identifier of an Observation Domain that is locally unique to an Exporting Process. The Exporting Process uses the Observation Domain ID to uniquely identify to the Collecting Process the Observation Domain where Flows were metered. It is RECOMMENDED that this identifier is also unique per IPFIX Device. A value of 0 indicates that no specific Observation Domain is identified by this Information Element. Typically, this Information Element is used for limiting the scope of other Information Elements.
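To make the Source ID description more concrete, here is a minimal Python sketch that pulls the Source ID out of a NetFlow v9 packet header and splits it according to the Cisco layout quoted above. The 20-byte header layout follows RFC 3954; the function names and sample values are my own, and other vendors are free to use the 32 bits differently.

```python
import struct

# NetFlow v9 header (RFC 3954): version, count, sysUpTime,
# UNIX secs, sequence number, Source ID = 20 bytes, network byte order.
V9_HEADER = struct.Struct("!HHIIII")


def parse_v9_source_id(packet: bytes) -> int:
    """Return the 32-bit Source ID from a NetFlow v9 export packet."""
    version, _count, _uptime, _secs, _seq, source_id = V9_HEADER.unpack_from(packet)
    if version != 9:
        raise ValueError(f"not a NetFlow v9 packet (version={version})")
    return source_id


def split_cisco_source_id(source_id: int):
    """Split a Source ID using the Cisco layout described above.

    Bytes 1-2 are reserved, byte 3 identifies the routing engine and
    byte 4 identifies the line card or VIP. Vendor specific.
    """
    reserved = source_id >> 16
    engine = (source_id >> 8) & 0xFF
    slot = source_id & 0xFF
    return reserved, engine, slot


def exporter_key(source_ip: str, source_id: int):
    """Key a collector could use to tell NetFlow instances apart."""
    return (source_ip, source_id)


# Hand-built sample header: engine 1, line card 2.
sample = V9_HEADER.pack(9, 1, 1000, 1442448000, 42, 0x00000102)
sid = parse_v9_source_id(sample)
print(split_cisco_source_id(sid))  # (0, 1, 2)
print(exporter_key("192.0.2.1", sid) == exporter_key("192.0.2.1", 0x0103))  # False
```

A collector that keys only on the source IP would merge those two instances, which is the kind of mixing the VMware documentation quoted below warns about.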

We were one of the first vendors to enter the NetFlow, sFlow and IPFIX market, so I remember way back to the ESX Server v3.5 beta, when only NetFlow was supported. ESX Server v4 didn’t support NetFlow or IPFIX, and IPFIX was introduced in ESX Server v5. On this topic, VMware has this to say in the ESX v3.5 documentation: “NetFlow on ESXServer embeds the virtual switch ID into the engineType and engineID fields of the header of each NetFlow export packet. (Flows from different virtual switches are always sent in separate packets.) Most collectors ignore these fields. In this case, interfaces on different virtual switches that have the same local ID are merged or aggregated into a single interface by the collector, mixing their flows together. If your collector shows this behavior, you can use this option to change the way source portIDs and destination portIDs are encoded.”

VMware Today Supports IPFIX

Then along came ESX Server v5 with IPFIX support. VMware probably switched from NetFlow to IPFIX for several reasons:

- NetFlow is proprietary to Cisco just as sFlow is proprietary to InMon.

- IPFIX allows for vendor-specific elements. NetFlow doesn’t, although Cisco did pass out ranges of elements to several vendors. Vendor-specific elements empower vendors to export unique details not defined in the standards.

- IPFIX allows for variable-length strings (e.g. exporting URL details).

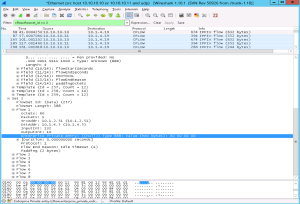

All of this is well and good, except that in the VMware v5.0 and v5.1 IPFIX packet captures, something in the IPFIX template caught our eye regarding the Observation Domain ID: element 0_888.

We tried both assigning a VDS IP address and not assigning one, and in both v5.0 and v5.1 the Observation Domain ID didn’t change. In other words, it is always 0, regardless of whether the VDS IP address is set or not.

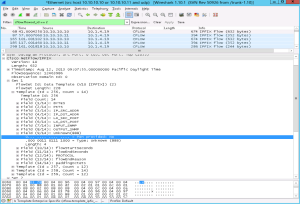

You’ll notice at the very bottom of the above Wireshark screen capture (highlighted) that the value of “Observation Domain ID: 0” is a 4-byte field, “00 00 00 00” in hex, which could be interpreted as 0.0.0.0. The IPFIX template (not shown above) told us that this 4-byte field has an element ID of 0_888.
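For developers who want to look at this field without Wireshark, here is a small Python sketch that reads the Observation Domain ID from the 16-byte IPFIX message header defined in RFC 7011. The sample packet is hand-built for the example (it is not taken from a VMware capture), and `parse_ipfix_header` is simply a name I picked.

```python
import struct

# IPFIX message header (RFC 7011): version, length, export time,
# sequence number, Observation Domain ID = 16 bytes, network byte order.
IPFIX_HEADER = struct.Struct("!HHIII")


def parse_ipfix_header(packet: bytes) -> dict:
    """Decode the fixed IPFIX message header from the start of a packet."""
    version, length, export_time, sequence, domain_id = IPFIX_HEADER.unpack_from(packet)
    if version != 10:
        raise ValueError(f"not an IPFIX message (version={version})")
    return {
        "length": length,
        "export_time": export_time,
        "sequence": sequence,
        "observation_domain_id": domain_id,
    }


# Hand-built header with an Observation Domain ID of 0.
sample = IPFIX_HEADER.pack(10, 16, 1442448000, 1, 0)
header = parse_ipfix_header(sample)

print(header["observation_domain_id"])  # 0
print(sample[12:16].hex(" "))           # 00 00 00 00
```

The Observation Domain ID occupies the last four bytes of the header, so a value of 0 shows up on the wire as four zero bytes.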

If VMware is making reference to the IANA defined observationDomainId, the element should be 149, not 888.

NOTE: I could be wrong in my assumption, but I’ll digress anyway because, for software developers, it is worth learning about.

If it was VMware’s desire to assign 888 as a proprietary element, then the preceding ‘0’ should have been the VMware Private Enterprise Number (PEN), 6876. In other words, as we understand IPFIX construction, the element should have been either 0_149 or 6876_888. As it stands, 0_888 appears to be a mishmash of the two proper element ID formats.
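Here is how we read the template encoding, sketched in Python. Per RFC 7011, each field specifier in a template starts with a 16-bit value whose top bit is the enterprise bit and whose lower 15 bits are the element ID, followed by a 16-bit field length; a 32-bit PEN follows only when the enterprise bit is set. The `PEN_element` label format is just the notation used in this post, and the function names and sample bytes are made up for the example.

```python
import struct


def parse_field_specifier(buf: bytes, offset: int = 0):
    """Decode one IPFIX template field specifier (RFC 7011).

    Returns (pen, element_id, field_length, next_offset). A PEN of 0
    means the element is an IANA-registered one (enterprise bit clear).
    """
    raw_id, field_length = struct.unpack_from("!HH", buf, offset)
    offset += 4
    enterprise_bit = bool(raw_id & 0x8000)
    element_id = raw_id & 0x7FFF
    pen = 0
    if enterprise_bit:
        # The 4-byte Private Enterprise Number follows the field length.
        (pen,) = struct.unpack_from("!I", buf, offset)
        offset += 4
    return pen, element_id, field_length, offset


def label(pen: int, element_id: int) -> str:
    """Format an element the way this post writes it, e.g. 6876_888."""
    return f"{pen}_{element_id}"


# Hand-built 4-byte-wide field specifiers for the three cases discussed.
iana_149 = struct.pack("!HH", 149, 4)                      # observationDomainId
vmware_888 = struct.pack("!HHI", 0x8000 | 888, 4, 6876)    # enterprise element
odd_888 = struct.pack("!HH", 888, 4)                       # enterprise bit clear

for spec in (iana_149, vmware_888, odd_888):
    pen, element_id, _length, _next = parse_field_specifier(spec)
    print(label(pen, element_id))
# 0_149
# 6876_888
# 0_888
```

The third case is the one that puzzled us: with the enterprise bit clear, no PEN is carried, so a collector has no choice but to treat 888 as an IANA element.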

September 17, 2015 Update: VMware has fixed the above issue and is now exporting 6876_888. If you are still seeing 0_888, you need to upgrade.